
Introduction

Recent advances in generative artificial intelligence, exemplified by tools such as ChatGPT, Bard, and Claude, have profoundly transformed how we design and produce content. From text to images, and from video to audio, AI-assisted creations are now ubiquitous in communication, marketing, and production strategies. Yet behind this technological revolution lies a critical legal question: who actually owns this content? Copyright law, designed for human-made works, faces a new paradigm. Understanding the applicable rules, the risks, and the measures to implement is now a strategic priority for companies, creators, and institutions seeking to combine innovation with legal compliance.

Legal framework for AI-generated content

Only human creation is protected

Under French law, Article L.111-1 of the Intellectual Property Code grants protection to the author of a work of the mind, and the author is understood to be a natural person. The requirement that a work bear its author's “personal imprint” excludes works generated exclusively by an algorithm. An image, text, or musical composition produced without significant human intervention does not meet the originality criterion. Case law and legal scholarship confirm this interpretation, which is consistent with EU Directive 2001/29/EC and WIPO guidelines. AI, as a mere tool, cannot be granted authorship status.

Transparency obligations and legislative developments

While current law does not recognize AI as having creative autonomy, regulations are evolving to govern its use. Directive (EU) 2019/790 already provides exceptions for text and data mining, while safeguarding protected works. The EU AI Act, adopted but not yet fully applicable, requires providers of general-purpose AI models to document and publish a summary of the content used to train their models. This obligation aims to enhance traceability and reduce the risk of unlawful reuse of pre-existing works, giving rights holders a new means of control.

Authorship and ownership of copyright

User or developer: who is the author?

Determining authorship in the context of conversational AI depends on the nature and scope of human intervention. If the user formulates precise prompts, refines the outputs, and incorporates substantial creative choices, they may claim ownership of the original parts of the work. Conversely, the AI developer retains rights to the software, architecture, and code, but not to the specific outputs. This distinction, enshrined in doctrine and contractual clauses, is essential to avoid confusion over the intellectual property of generated content.

The decisive role of terms of use

The terms of use of AI platforms are crucial in allocating rights. For example, OpenAI’s terms state that the user owns the rights to the outputs, provided they comply with applicable laws, including copyright. However, such clauses do not exempt the user from liability in the event of third-party rights infringement. Extra caution is therefore needed in commercial exploitation to ensure that generated content does not reproduce, even partially, a protected work.

Legal risks of using a chatbot

Risks of direct or indirect infringement

Using a chatbot does not shield one from liability for infringement. Generated content may, intentionally or not, reproduce all or part of an existing protected work. Copying a literary text, a musical passage, or a protected visual, even in modified form, can constitute a violation of rights. This risk is heightened by AI’s ability to memorize and output fragments learned during training. Businesses should implement systematic verification procedures before public release.

Training data and pre-existing works

AI models are trained on massive datasets, sometimes including protected works collected without explicit authorization. This practice raises major legal issues, particularly concerning reproduction and public communication rights. The lawsuit brought by The New York Times against OpenAI and Microsoft perfectly illustrates this problem: the newspaper claims its editorial content was used without a license to train their models. Such actions are likely to increase as rights holders become aware of the use of their works.

Strategies to secure the use of AI content

Creative and documented human intervention

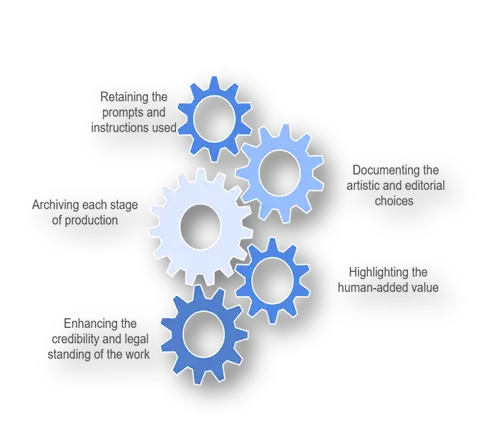

To qualify for copyright protection, the user must demonstrate a genuine creative contribution. This requirement entails:

- Retaining the prompts and instructions used to generate the content, in order to trace the creative process.

- Documenting the artistic and editorial choices (selection, modifications, additions) made to the AI-generated output.

- Archiving each stage of production to establish a chronological record of the work undertaken.

- Highlighting the human-added value compared to mere automated AI production.

- Enhancing the credibility and legal standing of the work through a complete file that can be produced in the event of a dispute.
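The record-keeping steps above can be sketched in code. What follows is a minimal illustration in Python, not a prescribed method: the `ProvenanceLog` class, its field names, and the sample entries are all hypothetical. Each entry stores a timestamp, the production stage, and the text, and is hashed together with the previous entry's hash so that a later change to the record can be detected.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ProvenanceLog:
    """Hypothetical append-only record of a creative process (illustrative only)."""
    entries: list = field(default_factory=list)

    def add(self, stage: str, content: str, note: str = "") -> dict:
        # Link each entry to the previous one so the sequence cannot be silently altered.
        previous_hash = self.entries[-1]["hash"] if self.entries else ""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "stage": stage,          # e.g. "prompt", "ai_output", "human_edit"
            "content": content,
            "note": note,            # free-text description of the editorial choice
            "previous_hash": previous_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        serialized = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(serialized).hexdigest()

    def verify(self) -> bool:
        # Recompute each hash and check the chain from the first entry onward.
        previous_hash = ""
        for entry in self.entries:
            if entry["previous_hash"] != previous_hash:
                return False
            if entry["hash"] != self._digest(entry):
                return False
            previous_hash = entry["hash"]
        return True


log = ProvenanceLog()
log.add("prompt", "Write a product description for a ceramic lamp.")
log.add("ai_output", "A lamp that brings warmth to any room.")
log.add("human_edit", "A hand-glazed lamp that warms the room it lights.",
        note="Rewrote the sentence and added the hand-glazed detail.")
print(log.verify())
```

The `note` field is where the human choices (selection, modification, addition) would be described. In practice such a log would be exported and stored alongside the work.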

Contractual clauses and compliance audits

Contracts with service providers or partners should include precise clauses governing AI use. Recommended provisions include warranties of non-infringement, transparency obligations regarding the origin of content, and a clear allocation of responsibilities. Regular audits, particularly using similarity detection tools, help secure distribution and avoid costly disputes. This is especially relevant in sectors with high creative intensity.
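As an illustration of what a basic similarity check involves, here is a minimal sketch in Python using the standard library's `difflib.SequenceMatcher`. The function name `flag_similar`, the 0.8 threshold, and the sample texts are hypothetical. This is a character-level comparison only, far simpler than dedicated detection tools, and a low score does not establish that content is free of infringement.

```python
from difflib import SequenceMatcher


def flag_similar(candidate: str, references: dict[str, str],
                 threshold: float = 0.8) -> list[tuple[str, float]]:
    """Return (reference name, ratio) pairs whose similarity meets the threshold."""
    flagged = []
    for name, text in references.items():
        # ratio() is 1.0 for identical strings and approaches 0.0 for unrelated ones.
        ratio = SequenceMatcher(None, candidate.lower(), text.lower()).ratio()
        if ratio >= threshold:
            flagged.append((name, round(ratio, 2)))
    # Most similar reference first.
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Hypothetical reference corpus of texts to compare against.
references = {
    "known_text_a": "The quick brown fox jumps over the lazy dog.",
    "known_text_b": "An entirely different sentence about contract law.",
}
generated = "The quick brown fox jumped over the lazy dog."
print(flag_similar(generated, references))
```

Anything the function flags would then go to human review before release. The threshold is a judgment call and would need tuning for the type of content audited.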

International perspectives and legislative developments

Divergent approaches across jurisdictions

In France and the European Union, the requirement for human originality is non-negotiable. In the United States, the Copyright Office refuses to register works generated without significant human contribution. However, some countries, such as the United Kingdom and India, are exploring hybrid regimes in which the programmer may be recognized as the author. These divergences complicate the international management of rights, forcing businesses to adapt their strategies by jurisdiction.

Upcoming reforms and impact on users

The EU AI Act marks a turning point by imposing transparency obligations regarding training data and regulating high-risk AI systems. Meanwhile, WIPO is conducting consultations to propose a harmonized international framework, potentially introducing new forms of protection adapted to AI. These reforms, some adopted but not yet fully applicable and others still under discussion, could profoundly alter the way AI-generated content is exploited and protected, prompting stakeholders to anticipate their future obligations now.

Conclusion

Protection for AI-generated content remains contingent on substantial and identifiable human creative input. Businesses and creators must integrate this requirement into their processes, combining documentation, verification, and contractual safeguards. The issue is not only legal but also central to the economic value of works and the management of associated risks.

Dreyfus & Associés is in partnership with a global network of intellectual property lawyers

Nathalie Dreyfus with the support of the entire Dreyfus team

FAQ

1. Is content generated by ChatGPT automatically protected by copyright?

No, only a work with original human contribution can qualify for protection.

2. Who owns the rights: the user or the AI developer?

Generally the user, unless otherwise stipulated in the terms of use or a specific contract.

3. What are the main copyright risks associated with chatbots?

Unauthorized reproduction of protected works or use of training data covered by rights.

4. Are the rules the same in Europe and the United States?

No, both require human involvement, but with differing criteria and practices.

5. What clauses should be included in a contract to regulate AI use?

Clauses on ownership, non-infringement warranties, transparency, and allocation of responsibilities.