Introduction

Artificial intelligence (AI) has become an indispensable tool in communication and advertising. Capable of drafting texts, generating visuals, imitating voices, or even creating virtual characters, AI is transforming the way companies design their campaigns and interact with their audiences. In the field of influencer marketing, these technologies expand creative possibilities: automated recommendations, personalized messaging, virtual influencer avatars, and content tailored to each user profile.

However, this technological revolution comes with significant legal risks. Who owns the copyright in AI-generated creations? How can the transparency and authenticity of an advertisement produced without direct human involvement be ensured? And what obligations now apply to brands and influencers under the emerging regulatory framework governing digital communications?

The legal challenges of AI-generated or AI-assisted advertising creation

The issue of copyright ownership

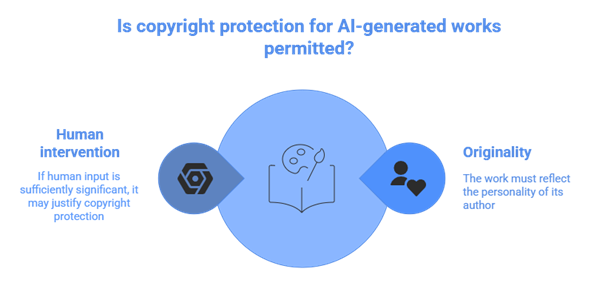

Under French law, copyright protection depends on the originality of the work, understood as the personal imprint of its author. This principle, reflected in Directive 2001/29/EC and the French Intellectual Property Code (Articles L111-1 et seq.), implies that a purely machine-generated creation cannot be protected, as AI has no legal personality and cannot claim authorship.

The situation becomes more complex when a human partially intervenes, for example by writing the prompt, selecting a model, or editing the result. The prevailing view among legal specialists is that sufficiently significant human input may justify copyright protection. In such cases, the extent of human creativity determines whether the work qualifies as an original intellectual creation.

For further insights on the link between copyright law and artificial intelligence, we invite you to consult our previously published articles on AI-generated creations and the legal risks arising from their use.

Risks of infringement and violation of image rights

Generative AI systems are trained on vast datasets that often contain copyrighted works. Their use can lead to partial reproductions of existing elements without authorization. An advertisement using such outputs could therefore constitute an act of copyright infringement.

Similarly, if an AI generates a face, voice, or body resembling a real person without that person's consent, it may infringe their image rights or right to privacy. In influencer marketing, where authenticity and personality are central, such misuse can damage both the brand's reputation and consumer trust.

The role of the European AI Act: a structural framework for digital advertising

A pioneering text regulating AI within the European Union

Adopted in 2024, with most of its provisions becoming applicable from August 2026, the AI Act is the world's first comprehensive legislation to regulate artificial intelligence through a risk-based approach. It establishes four levels of risk (unacceptable, high, limited, and minimal) and sets out specific obligations depending on the purpose and impact of each AI system.

Direct impact on advertising and influencers

Generative AI systems used to create advertising content fall under the “limited-risk” category but are subject to enhanced transparency requirements. Under Article 50 of the AI Act, both providers and deployers must:

- Clearly indicate when content is generated by artificial intelligence;

- Disclose any significant alteration or manipulation of reality (voice, image, video);

- Implement safeguards against misinformation and opinion manipulation;

- Maintain documentation on the datasets and sources used to train the AI model.

Consequently, companies and influencers will need to adapt their practices to this new regulatory framework by integrating these obligations into their production workflows and compliance policies.

Towards shared responsibility among stakeholders

Article 25 of the AI Act introduces a shared responsibility regime among developers, providers, and deployers of AI systems. In the advertising sector, this entails greater traceability and accountability: identifying which AI tools were used, under what conditions, with which type of data, and with what degree of human oversight.

This approach complements the logic of the GDPR and reinforces the need for robust legal governance of AI in commercial communication.

The legal obligations of companies and influencers in the age of AI

Transparency and identification of advertising content

Transparency obligations remain central under both French and EU law. Under Article 5 of French Law No. 2023-451 of 9 June 2023 (which regulates commercial influence and combats abuses by influencers on social media), any commercial communication must be clearly identifiable as such. Consequently, advertisements must explicitly indicate when they result from AI-generated content.

Campaigns that fail to make such disclosures may be deemed misleading commercial practices, exposing both the advertiser and the influencer to civil and criminal liability. Companies should therefore adopt a transparency policy ensuring that all AI-assisted content carries clear, visible notices distinguishing human from artificial communication.

Data protection and advertising profiling

AI-based advertising tools often rely on extensive processing of personal data, including browsing history, location, interests, and purchasing behaviour. Such processing must comply with the General Data Protection Regulation (GDPR).

Accordingly, companies must:

- Obtain explicit user consent to use their personal data;

- Limit personal data collection to what is strictly necessary;

- Provide clear information on the purposes of processing personal data;

- Regulate the use of automated decision-making and profiling systems.

Every AI-driven advertising campaign must therefore incorporate GDPR compliance from the design stage onward.

Conclusion

Artificial intelligence is redefining advertising creation and influencer marketing, but it also introduces a complex web of legal responsibilities. Key challenges include intellectual property protection, advertising transparency, data protection, and compliance with the AI Act.

Companies must take a preventive and strategic approach, auditing their AI tools, documenting their use, and implementing internal governance mechanisms to ensure compliance. Only under these conditions can AI become a regulated innovation rather than a legal risk.

Dreyfus & Associés assists its clients in managing complex intellectual property cases, offering personalized advice and comprehensive operational support for the complete protection of intellectual property.

Nathalie Dreyfus with the support of the entire Dreyfus team

Q&A

1. Must AI-generated advertisements undergo legal review before publication?

Yes. It is strongly advised to perform a comprehensive legal audit before publication, including verification of third-party rights, GDPR compliance, mandatory transparency notices, and the absence of any unauthorized reproductions (logos, trademarks, or artistic works).

2. Can an AI-generated image of a person violate image rights?

Yes. If the AI-generated image resembles or imitates a real person without authorization, it may infringe that person's image rights or right to privacy and, in commercial contexts, could amount to unfair competition or parasitism.

3. What are the legal risks of undisclosed AI use in advertising?

Failure to disclose the use of AI may constitute a misleading commercial practice, exposing the advertiser and influencer to civil, criminal, and administrative sanctions.

4. How can companies regulate AI use by their partners?

By including dedicated clauses in contracts that specify rights ownership, responsibilities, GDPR compliance, mandatory transparency notices, and pre-publication validation procedures.

5. How can companies prepare for the application of the AI Act?

They should:

- Identify all AI tools used in advertising;

- Document their purpose, providers, and operational parameters;

- Include clear AI-related disclosures in all content;

- Establish internal governance and regulatory monitoring systems.

This publication is intended to provide general guidance to the public and to highlight certain issues. It is not designed to apply to specific situations, nor does it constitute legal advice.